Google's Deep Think Rethinks How You Think

This Week in Products, Google has released one of its most advanced reasoning models yet, capable of parallel thinking. What does this multi-agent system unlock?

Google has rolled out Deep Think in the Gemini app to all Google AI Ultra subscribers. Deep Think is Google’s first publicly available model of this kind and one of its most capable and advanced reasoning models.

Earlier, Google had also shared that a research-only version of Gemini 2.5 Deep Think recently achieved the gold-medal standard at this year’s International Mathematical Olympiad (IMO). Designed for everyday utility, the public version of Deep Think meets the “bronze standard” in reasoning benchmarks.

This reasoning model functions with a “parallel thinking mode,” where the system spawns multiple AI agents to tackle a query. The process may use significantly more computational resources than a single agent, but it produces more nuanced and detailed answers.

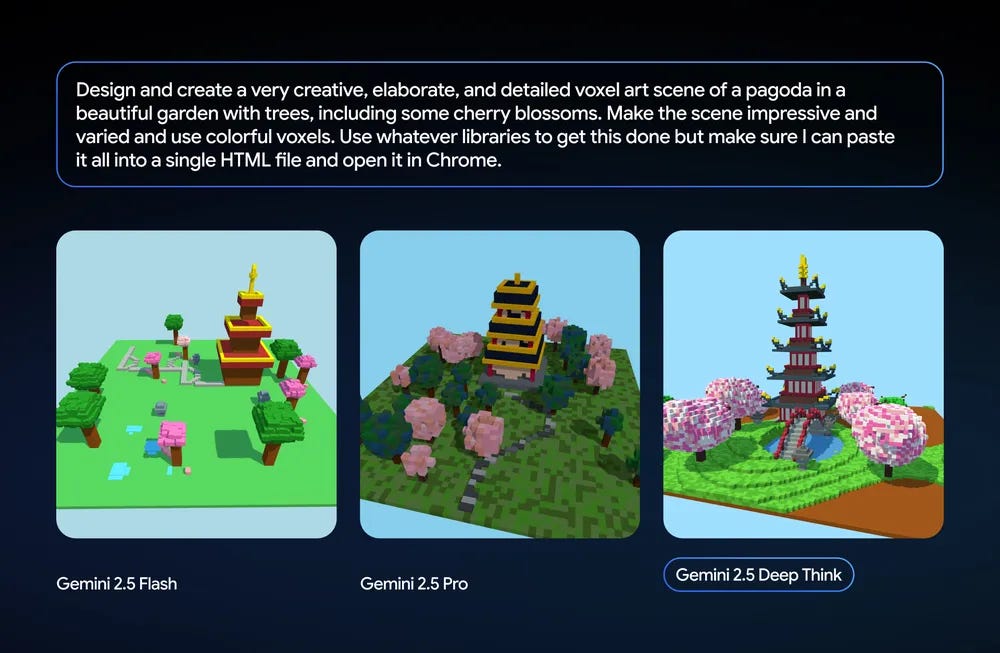

Here’s a glimpse into what the parallel thinking mode could do for you 👇.

Google believes Deep Think to be a “powerful tool in creative problem solving,” and This Week in Products, we explore what that means.

What Makes Parallel Thinking Different?

Most generic AI models work pretty simply. You type in your prompt, and they answer it one idea at a time. Let’s say you ask a generic LLM how to improve your app’s onboarding. The model might just reply “make it simpler with fewer steps,” and leave the real thinking to you.

Deep Think doesn’t take that route. The model reasons and “thinks” for you in parallel, looking at the same problem from multiple perspectives side by side.

How does it do that? It spins up different agents to explore the question from multiple angles. One looks at user psychology, another looks at design patterns, a third digs into friction points, and another checks what top apps are doing. Then it compares all those angles and pulls them together into a sharp, thoughtful response.
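To make the idea concrete, here is a minimal sketch of the fan-out-and-merge pattern described above. It is not Google's implementation: the `explore` and `parallel_think` functions are hypothetical stand-ins, the perspectives are borrowed from the onboarding example, and the "comparison" step is reduced to joining the findings.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical perspectives, borrowed from the onboarding example above.
PERSPECTIVES = ["user psychology", "design patterns", "friction points", "top apps"]


def explore(question: str, perspective: str) -> str:
    """Stand-in for one agent. A real agent would call a model here."""
    return f"[{perspective}] notes on: {question}"


def parallel_think(question: str) -> str:
    """Fan the question out to every perspective, then merge the findings."""
    with ThreadPoolExecutor(max_workers=len(PERSPECTIVES)) as pool:
        # map() runs the agents concurrently and returns results in input order.
        findings = list(pool.map(lambda p: explore(question, p), PERSPECTIVES))
    # Stand-in for the comparison step: simply join the findings.
    return "\n".join(findings)


if __name__ == "__main__":
    print(parallel_think("How do we improve app onboarding?"))
```

In a real system, the merge step would itself be a reasoning step that weighs the findings against each other, which is where the extra compute goes.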

The best way I can picture it: while generic LLMs tread water and give you a brief of everything that has ever existed, Deep Think scuba dives to the deepest corners of a problem and surfaces with an answer that’s “thought out.”

Deep Think, just like the name suggests, thinks deeply. And Google’s not the only one chasing this kind of multi-agent setup.

Elon Musk’s xAI has launched Grok 4 and Grok 4 Heavy, built on a new multi-agent architecture designed for more complex reasoning. The company also introduced SuperGrok Heavy, a $300/month premium plan offering early access to the Heavy model. xAI claims Grok 4 achieves PhD-level performance and leads on benchmarks like Humanity’s Last Exam, especially when tools are enabled.

Anthropic has developed a multi-agent architecture where a lead agent coordinates several autonomous subagents to tackle complex research queries in parallel. Each subagent uses tools independently, enabling broader and faster exploration. The system outperformed single-agent setups by over 90% on breadth-first tasks.

How Does Deep Think Reason?

Deep Think takes a few extra seconds to respond. This extra “inference time” is effectively thinking time. It uses that time to run multiple lines of reasoning in the background so that the answer you get is layered and more useful.

And here’s the best part. Google has fine-tuned the model with reinforcement learning. Now, what does that mean?

Think of it like a product iteration cycle, but for “thinking”: Google lets the model explore different reasoning paths, then gives it feedback on which ones led to better answers.
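The explore-then-get-feedback loop can be illustrated with a toy example. The sketch below is a simple epsilon-greedy bandit, a textbook reinforcement learning exercise, and not Google's training method. The reasoning paths and their success rates are invented purely for illustration.

```python
import random

# Hypothetical reasoning paths and how often each leads to a good answer.
# These numbers are invented for illustration only.
SUCCESS_RATE = {"guess": 0.2, "step_by_step": 0.6, "check_then_answer": 0.9}


def train(rounds: int = 2000, explore_rate: float = 0.1, seed: int = 0) -> dict:
    """Learn an estimated value for each reasoning path from feedback."""
    rng = random.Random(seed)
    value = {path: 0.0 for path in SUCCESS_RATE}
    tries = {path: 0 for path in SUCCESS_RATE}
    for _ in range(rounds):
        if rng.random() < explore_rate:
            # Explore: occasionally try a random path.
            path = rng.choice(list(SUCCESS_RATE))
        else:
            # Exploit: otherwise use the path that looks best so far.
            path = max(value, key=value.get)
        # Feedback: 1.0 if the path led to a good answer, else 0.0.
        reward = 1.0 if rng.random() < SUCCESS_RATE[path] else 0.0
        tries[path] += 1
        # Running average of the rewards seen for this path.
        value[path] += (reward - value[path]) / tries[path]
    return value


if __name__ == "__main__":
    for path, score in train().items():
        print(f"{path}: {score:.2f}")
```

Over many rounds, the paths that earn better feedback end up with higher estimated value and get chosen more often, which is the intuition behind "thinking better over time."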

The model is trained not just to think deeply, but to think better over time. Instead of only answering the “how” well, it also reasons about the “why” in the first place.

So what can Deep Think do for you?

🎨 Iterative Development

You can start with a rough draft, say, a basic layout or form of a product, and as you iterate, Deep Think can help you refine by suggesting better structure, improving usability, and even spotting design inconsistencies that could affect user experience.

This makes it especially powerful for teams working on frontends, dashboards, or tools where visual clarity and logic need to evolve together.

💡 Algorithmic Thinking

Deep Think is especially strong in algorithm-heavy tasks where success depends on how well the problem is framed and how smartly trade-offs are made. The mode can walk through the logic, explain why one path is better, and then implement it cleanly.

This makes it ideal for use cases like building core logic for apps, optimizing performance-critical code, or tackling technical interviews and puzzles where how you solve the problem matters just as much as the result.

The Big Picture

🧠 Not a Tool. A Thinking Partner.

Until now, AI has mostly acted like a supercharged assistant that’s fast, efficient, and helpful, but still reactive. You ask, it answers. You point, it executes. What Deep Think signals isn’t another leap in speed or scale.

It’s a shift in how we work, because it’s here to think with you. It changes the role of AI from a productivity hack to a strategic partner.

That’s the real shift: from AI that helps you move fast, to AI that helps you move forward with clarity. Not a co-pilot. A co-thinker.

📰What’s going around tech?

News That Matters for Product Leaders

Reddit CEO wants to take on Google with unified search

Reddit CEO Steve Huffman has announced a plan to merge Reddit’s regular search with its AI-powered Answers feature. The update puts a new unified search bar front and centre in the app, aiming to improve discovery and make Reddit a true search destination. With growing user interest in human-sourced answers, Reddit hopes to challenge Google’s dominance in everyday search.

Read More→

Meta lets candidates use AI during coding tests

Meta is now allowing some job candidates to use AI tools during coding interviews, reflecting how developers work today. The company is also running mock AI-assisted interviews with employees to test the new process. The move aims to make technical assessments more realistic and reduce unfair advantages from hidden AI use, while also gathering feedback on how AI changes candidate behaviour and performance.

Read More→

OpenAI’s GPT‑5 could arrive on Microsoft Copilot alongside ChatGPT

Reports indicate that Microsoft is testing a new “Smart” mode in Copilot powered by OpenAI’s upcoming GPT‑5, potentially rolling out on the platform as soon as the model is available on ChatGPT. The feature, spotted via internal previews, is designed to eliminate manual mode-switching by dynamically selecting deep or faster AI reasoning based on task complexity. OpenAI is expected to release GPT‑5 in August 2025.

Read More→

Google won’t deactivate all goo.gl links after all

Google has reversed its decision to disable most goo.gl shortened links. The links were initially set to go inactive by August 25, but now only those flagged for long-term inactivity will be affected. Active goo.gl URLs will continue working. The change comes after user feedback raised concerns about broken links in documents and websites. Google had stopped generating new goo.gl links back in 2019.

Read More→

Google bets on STAN, an Indian social gaming platform

Google has invested in STAN, an Indian-origin platform that combines gaming, creators, communities, and publishers, as part of an $8.5 million equity round via its AI Futures Fund. Backers also include Bandai Namco, Square Enix, and Aptos Labs. STAN lets users earn in-app “Gems” from games like Free Fire and Minecraft, use them to join creator-run “Clubs,” and redeem them for vouchers on Amazon, PhonePe, and Flipkart.

Read More→

A video I found insightful

AGI is Closer to Us Than We Think it is

Demis Hassabis, the CEO of Google DeepMind, has a front-row seat to one of the most fascinating shifts in tech, the journey toward artificial general intelligence, or AGI. While he doesn’t claim that AGI is right around the corner, he does think there’s a 50-50 chance we’ll get there in the next ten years.

The way he sees it, we’re racing toward something we’ve never really seen before: machines that can think and reason more like us.

He views it as a societal transition, something that’s more likely to happen gradually than all at once. In his words, it’ll unfold “incrementally,” not like flipping a switch overnight. Infrastructure, institutions, and human behaviour take time to catch up, which makes the shift comparable to something as significant as the Industrial Revolution.

Maybe even bigger.

He talks about the future of work with a calm, grounded take. Yes, there’s going to be a disruption. But like every big shift before this, AI could also create new kinds of work, especially if we make sure to keep aspects such as empathy and creativity at the center. And if you’re a student today, he says, don’t wait. Start learning how to prompt, how to fine-tune, how to build, and how these tools actually work.

Still, even as he talks about a future of “radical abundance” where AGI helps us solve for energy, health, and maybe even space, there’s a quiet skepticism about whether we’ll share those gains fairly. The tech might be capable. The real question is whether we are.

Watch the video for more insights

📬I hope you enjoyed this week's curated stories and resources. Check your inbox again next week, or read previous editions of this newsletter for more insights. To get instant updates, connect with me on LinkedIn.

Cheers!

Khuze Siam

Founder: Siam Computing & ProdWrks